Forensic-Grade Smile Detection with Light Image Resizer and AI Vision

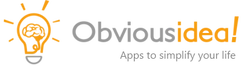

We are pleased to announce a new build of Light Image Resizer, incorporating extensive testing of the AI Vision feature as a smart, batch-capable image tagger. You can consult the full version history here. This article walks you through a concrete use case: configuring a preset that processes an entire folder of photographs and automatically detects the presence of a human subject along with a scientifically grounded smile intensity score. The methodology is inspired by the Facial Action Coding System (FACS) developed by Paul Ekman, which makes the output reproducible, structured, and suitable for professional or forensic applications.

The Science Behind the Detection: FACS and Action Units

The Facial Action Coding System, originally developed by Swedish anatomist Carl-Herman Hjortsjö and later adopted and expanded by Paul Ekman and Wallace V. Friesen, is the international standard for describing facial muscle movements. Ekman, a psychologist and professor at the University of California, San Francisco, is recognized as one of the pioneers in the scientific study of emotions and their relationship to facial expressions. The system decomposes any facial expression into discrete Action Units (AU), each corresponding to the contraction of one or more specific muscles. For smile detection, the two critical units are AU6 (Orbicularis Oculi, cheek raiser) and AU12 (Zygomaticus Major, lip corner puller). A genuine Duchenne smile requires both AU6 and AU12 to activate simultaneously. A voluntary or posed smile typically involves AU12 alone. This distinction is precisely what the AI Vision prompt is designed to detect and report.
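The AU6/AU12 rule described above can be written as a small decision function. This is a minimal Python sketch for illustration only: the function name and the returned labels are hypothetical and are not part of FACS or of Light Image Resizer.

```python
def classify_smile(au6_active: bool, au12_active: bool) -> str:
    """Map the two smile-related Action Units to a coarse label.

    The labels are illustrative names chosen for this sketch.
    """
    if au12_active and au6_active:
        # Lip corner puller plus cheek raiser: Duchenne (genuine) smile.
        return "duchenne"
    if au12_active:
        # Lip corner puller alone: typically a voluntary or posed smile.
        return "posed"
    # Without AU12 there is no smile, whatever AU6 is doing.
    return "no_smile"
```

For example, `classify_smile(au6_active=False, au12_active=True)` returns `"posed"`.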

Use Case: Batch Analysis of 1500 Photographs for a Legal Investigation

To illustrate the practical scope of this workflow, consider the following scenario. A legal investigation requires reviewing a collection of 1500 photographs to determine whether a human subject was exhibiting distress or a relaxed expression during a photoshoot session. Manually reviewing 1500 files is time-consuming and subjective. With Light Image Resizer configured as described in this tutorial, the entire batch is processed automatically, and each file receives a structured, machine-readable tag written directly into its metadata. The tag is generated by an AI vision model instructed to act as a forensic facial expression analyst. The result looks like this:

OLLAMA-MISTRAL-FORENSIC32-EXPERTV2:LATEST

[NO_SMILE:100]

No AU12 or AU6 activation detected. Neutral facial expression.

The first line identifies the model used. The second line is the standardized tag with a confidence score expressed as an integer in steps of ten. The third line provides a brief technical justification based on muscular analysis and artifact detection. This three-line output is repeatable, parseable, and auditable.
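To show what "parseable" means in practice, here is a minimal Python sketch that splits the three-line result into its fields. The `Verdict` type and the `parse_verdict` function are hypothetical names introduced for this example, and the sketch assumes the model has respected the three-line structure.

```python
import re
from typing import NamedTuple


class Verdict(NamedTuple):
    model: str
    tag: str
    score: int
    justification: str


# Matches a tag line such as [NO_SMILE:100].
TAG_LINE = re.compile(r"^\[([A-Z_]+):(\d{1,3})\]$")


def parse_verdict(text: str) -> Verdict:
    """Parse one three-line AI Vision result, ignoring blank lines."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if len(lines) != 3:
        raise ValueError(f"expected 3 non-empty lines, got {len(lines)}")
    match = TAG_LINE.match(lines[1])
    if match is None:
        raise ValueError(f"malformed tag line: {lines[1]!r}")
    return Verdict(lines[0], match.group(1), int(match.group(2)), lines[2])


sample = """OLLAMA-MISTRAL-FORENSIC32-EXPERTV2:LATEST
[NO_SMILE:100]
No AU12 or AU6 activation detected. Neutral facial expression."""

verdict = parse_verdict(sample)
print(verdict.tag, verdict.score)
```

Raising an error on a malformed result, rather than guessing, keeps any downstream statistics auditable.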

You can download the ready-to-use preset for this use case here:

Download the Forensic Smile Detection Preset

Why Run the Model Locally with Ollama

Privacy is a primary concern in any forensic or legal context. Uploading photographs of individuals to a third-party cloud API introduces risks that are unacceptable in sensitive investigations. Light Image Resizer now supports Ollama, which allows you to run vision language models entirely on your own machine, without any data leaving your network. There are no API costs, no usage limits, and no dependency on an internet connection. For this use case, running locally is not just a preference; it is a requirement. You can learn more about the Ollama integration introduced in Light Image Resizer on the official product page.
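To make the "nothing leaves your machine" point concrete, the sketch below builds a request for Ollama's local HTTP API and sends it to `localhost`. It is not how Light Image Resizer talks to Ollama internally, which this article does not document. It assumes Ollama is listening on its default port 11434, and the function names are hypothetical.

```python
import base64
import json
import urllib.request

# Ollama's default local endpoint; the request never leaves this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(image_bytes: bytes, prompt: str, model: str) -> dict:
    """Build a non-streaming generate request with one attached image."""
    return {
        "model": model,
        "prompt": prompt,
        # Ollama expects images as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def ask_ollama(payload: dict, url: str = OLLAMA_URL) -> str:
    """Send the payload to the local Ollama server and return its text reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Calling `ask_ollama(build_payload(image_bytes, prompt, model))` requires a running Ollama instance with a vision-capable model already pulled.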

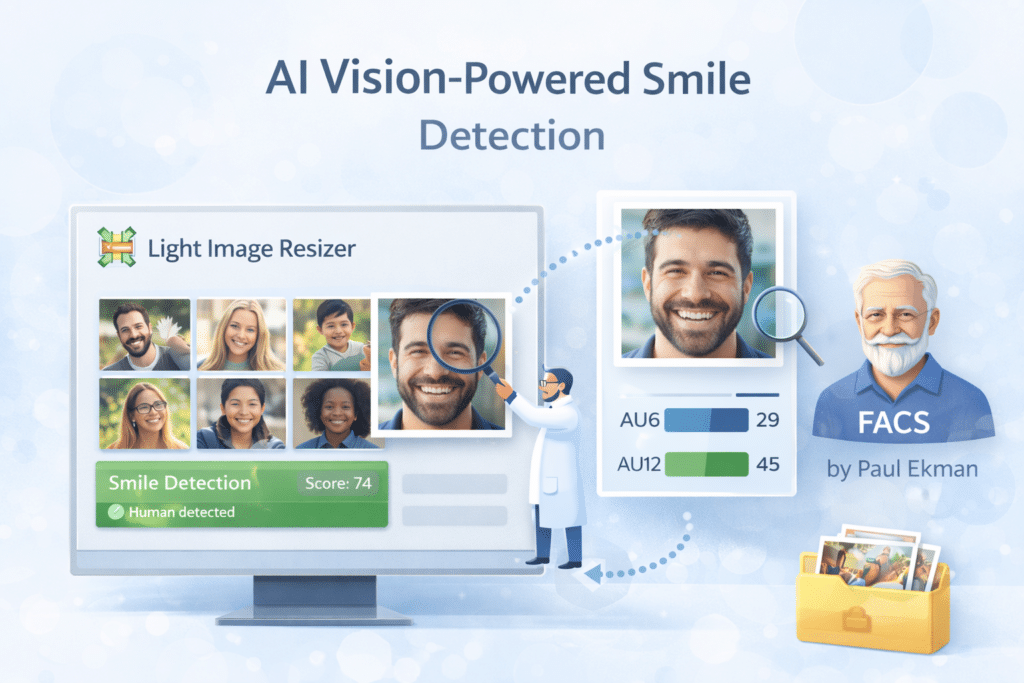

Configuring the Preset: General and File Type Settings

The key constraint in this use case is that the source images must not be altered. The preset is therefore configured to write only metadata, leaving the pixel content of each file completely untouched. In the General tab, set the action to Replace original and the destination to the same folder as the original. In the Advanced tab, the filter can remain at Lanczos and the policy at Always resize, but what matters is the File Type section: set Format to As Original, and under Compression, enable the Keep original quality option. This ensures that Light Image Resizer processes each file solely to write the AI Vision result as a comment in the image metadata, without re-encoding or degrading the image in any way. Resolution can be left at 96 DPI since it has no effect when the format is preserved as original.

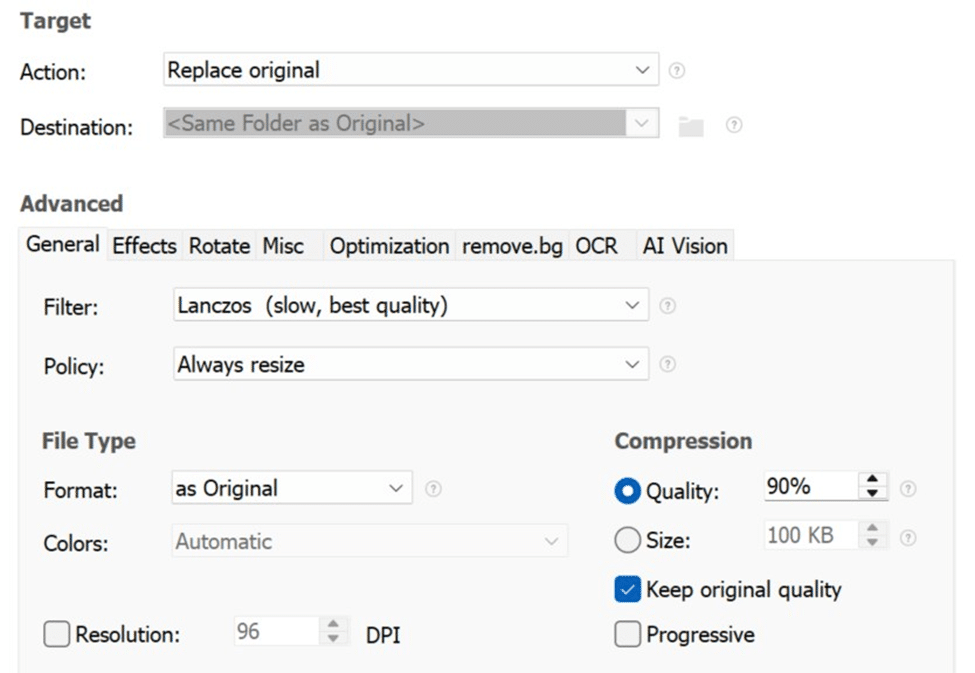

Configuring the AI Vision Tab

Open the Advanced panel and navigate to the AI Vision tab. Enable the feature using the checkbox at the top of the panel.

In the Service dropdown, select Ollama for fully local processing. If you prefer a cloud-based model, Gemini (Gemini 3 Flash) and ChatGPT (GPT-5 Mini) are also supported and require only that you enter your API key in the Config panel. Set Max Size to 896 pixels. This resolution is sufficient for facial analysis in the vast majority of photographic subjects and keeps processing time reasonable across large batches. For the Policy field, Append will add each new AI result to the existing comment field without erasing previous entries, which is useful when you run the same batch through multiple models for comparison. Replace will overwrite the comment field each time.
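The difference between the two Policy values can be sketched in a few lines of Python. This only illustrates the behaviour described above. The function name is hypothetical, and the blank-line separator between entries is an assumption, since the exact separator Light Image Resizer writes is not stated here.

```python
def apply_policy(existing: str, new_result: str, policy: str) -> str:
    """Return the comment field content after writing a new AI result."""
    if policy == "replace":
        # Replace: previous entries are discarded.
        return new_result
    if policy == "append":
        # Append: previous entries are kept (separator is an assumption).
        return f"{existing}\n\n{new_result}" if existing else new_result
    raise ValueError(f"unknown policy: {policy!r}")
```

With Append, running the batch once per model accumulates one entry per model in the same comment field, which is what makes side-by-side comparison possible.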

The Forensic Prompt

Paste the following prompt into the Prompt field of the AI Vision tab. Every element of this prompt is intentional. The system instruction eliminates conversational text from the output. The Muscular Decomposition Protocol directive focuses the model on AU6 and AU12. The artifact detection clause prevents misclassification caused by beards, fingers, cigars, or other occlusions. The thesaurus enforces a fixed vocabulary, making batch results directly comparable and searchable.

[SYSTEM] Act as a forensic facial expression analyst. Your mission is to translate facial muscle activity into a standardized tag with a confidence score. No prose. No conversational fillers. No 'think' tags. Apply the Muscular Decomposition Protocol (AU6/AU12) and identify mechanical artifacts (beard, cigar, finger) before concluding.

[OUTPUT STRUCTURE] Your response must consist of exactly three lines:

Line 1: %AISERVICE%-%AIMODEL%

Line 2: [TAG:SCORE]

Line 3: Brief technical justification (muscles vs artifacts).

[STRICT RULES]

1. SCORE: Must be an integer representing confidence from 0 to 100, strictly in steps of 10 (e.g., 60, 70, 80).

2. RELIABILITY GATE: If confidence is below 50, use the tag [UNSURE:SCORE] instead of a standard tag.

3. TAG SELECTION: Choose exactly one term from the THESAURUS below.

4. SYNTAX: Do not insert any characters, colons, or brackets between the TAG and the SCORE other than the specified [TAG:SCORE] format.

[THESAURUS]

NO_SMILE

MICRO_SMILE

SMILE

BROAD_SMILE

LAUGHING

UNSURE

[VALID EXAMPLE]

%AISERVICE%-%AIMODEL%

[UNSURE:90]

Artifact detected (beard shadow), insufficient AU6 activation.

verdict:

Processing time varies considerably depending on your GPU, the amount of VRAM available, and the model selected. On a system equipped with an NVIDIA RTX 3090 or 4060 Super, expect between 5 and 90 seconds per file. Models worth testing for this task include Qwen2.5-VL, Mistral Small 24B, and Gemma 4B for faster throughput on constrained hardware.
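Applied to the 1500-photograph use case, the per-file range quoted above translates into very different batch durations. The short Python calculation below shows the two extremes; the function name is hypothetical.

```python
from datetime import timedelta


def batch_duration(file_count: int, seconds_per_file: float) -> timedelta:
    """Total sequential processing time for a batch."""
    return timedelta(seconds=file_count * seconds_per_file)


fastest = batch_duration(1500, 5)   # 7,500 seconds
slowest = batch_duration(1500, 90)  # 135,000 seconds

print(fastest)  # 2:05:00
print(slowest)  # 1 day, 13:30:00
```

The batch therefore takes anywhere from about two hours to more than a day and a half, so it is worth timing a small sample with each candidate model first.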

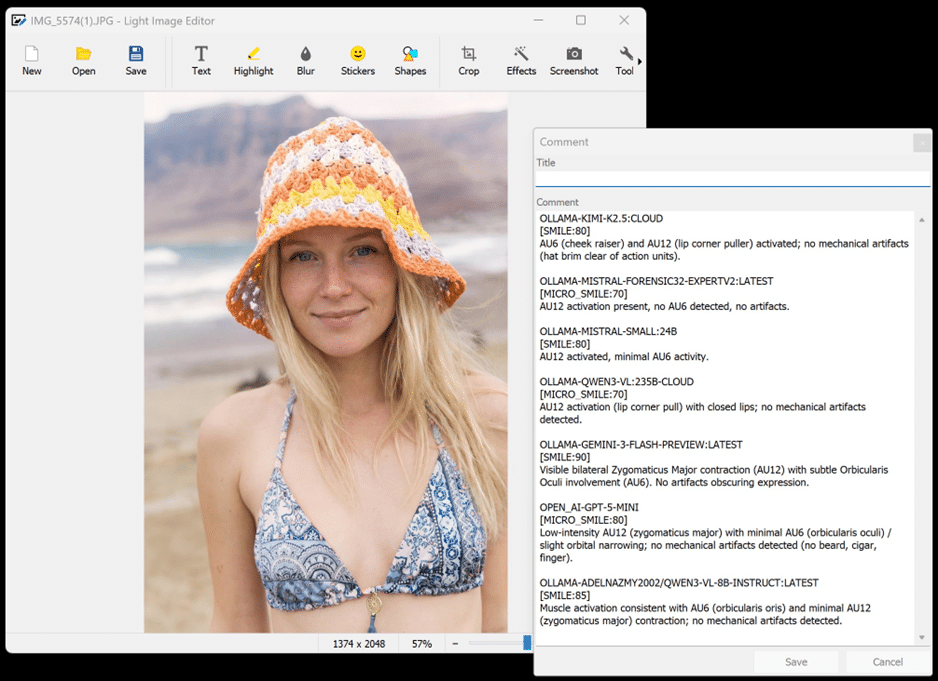

Reviewing Results in Light Image Editor

Once the batch has finished processing, open any file in Light Image Editor, which is included with Light Image Resizer. Navigate to Tools in the top menu and select Comment, or press Ctrl+T. The comment field will display the structured output from each model that was run against that image.
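When reviewing comments, it can help to confirm that a tag line actually obeys the strict rules stated in the prompt, since a model may occasionally drift from them. The following Python sketch checks the syntax, the thesaurus, the score steps, and the reliability gate. The function name is hypothetical, and the gate is implemented exactly as worded in rule 2 (a score below 50 requires the UNSURE tag).

```python
import re

THESAURUS = {"NO_SMILE", "MICRO_SMILE", "SMILE", "BROAD_SMILE", "LAUGHING", "UNSURE"}
TAG_LINE = re.compile(r"^\[([A-Z_]+):(\d+)\]$")


def check_tag_line(line: str) -> list[str]:
    """Return a list of rule violations; an empty list means the line is valid."""
    match = TAG_LINE.match(line.strip())
    if match is None:
        return ["syntax: line is not of the form [TAG:SCORE]"]
    tag, score = match.group(1), int(match.group(2))
    problems = []
    if tag not in THESAURUS:
        problems.append(f"tag selection: {tag} is not in the thesaurus")
    if score > 100 or score % 10 != 0:
        problems.append(f"score: {score} is not in 0..100 in steps of 10")
    if score < 50 and tag != "UNSURE":
        problems.append(f"reliability gate: score {score} requires UNSURE")
    return problems
```

For instance, `check_tag_line("[SMILE:80]")` returns an empty list, while `check_tag_line("[SMILE:45]")` reports both a score violation and a reliability gate violation.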

In the example shown above, the same photograph was analysed by seven different models, including a custom fine-tuned Mistral variant, Qwen3-VL 235B, Gemini 3 Flash Preview, GPT-5 Mini, and Kimi K2.5. The results vary in their precise classification between MICRO_SMILE and SMILE, but all agree on the absence of distress indicators and the presence of some degree of Zygomaticus Major activation. This kind of multi-model comparison is valuable when calibrating which model best suits your hardware constraints and your required output language. If you need results in French, Spanish, German, or another language, choosing a multilingual model such as Qwen or Mistral will produce the justification text in the target language without any modification to the prompt.

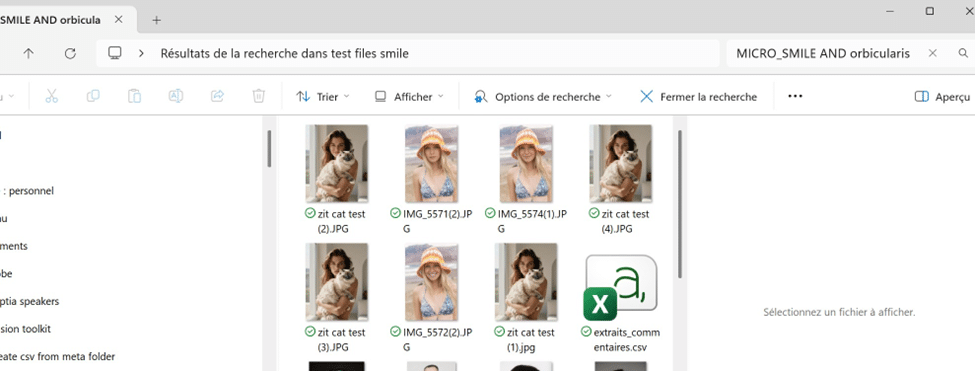

Searching Your Photo Library by AI-Generated Tags

After processing a batch, the structured tags written into each file’s metadata become immediately searchable from Windows Explorer. Open the folder containing your processed images and use the search bar to enter a keyword or a combination of keywords (see the top right corner of the screenshot).

Windows Explorer supports AND and OR operators in the search field. In the example shown, the query MICRO_SMILE AND orbicularis returns only the files where the model detected a low-intensity smile with documented orbicularis involvement. This transforms your local photo library into a structured, searchable evidence archive. The same approach extends to entirely different domains. You could write a prompt that identifies the country or city visible in a landscape photograph, classifies vehicle models, describes room configurations for real estate documentation, or analyses any visual attribute relevant to your professional context. The prompt is the only element that needs to change.
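The same AND logic is easy to reproduce in a script once the comments have been read out of the files. The Python sketch below is only an approximation of the Windows Explorer query, using case-insensitive substring matching; the function name, file names, and comment texts are hypothetical sample data.

```python
def matches_all(comment: str, keywords: list[str]) -> bool:
    """True when every keyword occurs in the comment (case-insensitive AND)."""
    text = comment.casefold()
    return all(keyword.casefold() in text for keyword in keywords)


# Hypothetical comments, as they might be read from two processed files.
comments = {
    "a.jpg": "OLLAMA-EXAMPLE\n[MICRO_SMILE:70]\nSlight AU12 with mild orbicularis oculi involvement.",
    "b.jpg": "OLLAMA-EXAMPLE\n[NO_SMILE:100]\nNo AU12 or AU6 activation detected.",
}

hits = [name for name, text in comments.items()
        if matches_all(text, ["MICRO_SMILE", "orbicularis"])]
print(hits)  # ['a.jpg']
```

Note that with plain substring matching, a search for SMILE also matches MICRO_SMILE and BROAD_SMILE; searching for the bracketed form, such as `[SMILE:`, avoids this.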

Exporting Metadata to CSV for Aggregate Analysis

For investigations requiring a statistical overview of an entire collection, a Python script is available on request that extracts the comment metadata from each processed file and consolidates it into a single CSV file. This CSV can then be imported into any spreadsheet application or submitted to a language model for higher-level pattern analysis, such as identifying the proportion of images containing genuine smiles versus neutral expressions across a timeline of photographs. To request the script, contact us with the subject line: AI Vision Smile python script.
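As an illustration of what such a consolidation involves, here is a minimal Python sketch. It is not the script mentioned above. It assumes the comment text of each file has already been extracted into a dictionary, because the extraction step depends on the metadata field used and is not covered here. The function names, column names, and sample file name are hypothetical.

```python
import csv
import io
import re

TAG_LINE = re.compile(r"^\[([A-Z_]+):(\d{1,3})\]$")


def comment_rows(filename: str, comment: str):
    """Yield one CSV row per [TAG:SCORE] entry found in a comment.

    A comment may hold several results when the Append policy was used.
    """
    lines = [ln.strip() for ln in comment.splitlines() if ln.strip()]
    for i, line in enumerate(lines):
        match = TAG_LINE.match(line)
        if match:
            model = lines[i - 1] if i > 0 else ""
            justification = lines[i + 1] if i + 1 < len(lines) else ""
            yield [filename, model, match.group(1), int(match.group(2)), justification]


def write_csv(comments: dict[str, str], out) -> None:
    """Write all results to an open text file object."""
    writer = csv.writer(out)
    writer.writerow(["file", "model", "tag", "score", "justification"])
    for filename, comment in comments.items():
        writer.writerows(comment_rows(filename, comment))


buffer = io.StringIO()
write_csv(
    {"IMG_0001.jpg": "OLLAMA-MISTRAL-FORENSIC32-EXPERTV2:LATEST\n[NO_SMILE:100]\n"
                     "No AU12 or AU6 activation detected. Neutral facial expression."},
    buffer,
)
print(buffer.getvalue())
```

To write to disk instead of a buffer, pass a file opened with `open(path, "w", newline="", encoding="utf-8")`.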

Conclusion

Light Image Resizer provides a complete pipeline for forensic-grade facial expression analysis at scale. The combination of a structured FACS-based prompt, a locally hosted Ollama vision model for full data privacy, and the direct writing of results into image metadata creates a workflow that is reproducible, auditable, and deployable on any Windows machine with a capable GPU. The same infrastructure adapts readily to any domain that benefits from automated, expert-level image annotation. Download Light Image Resizer and the forensic smile detection preset to get started.